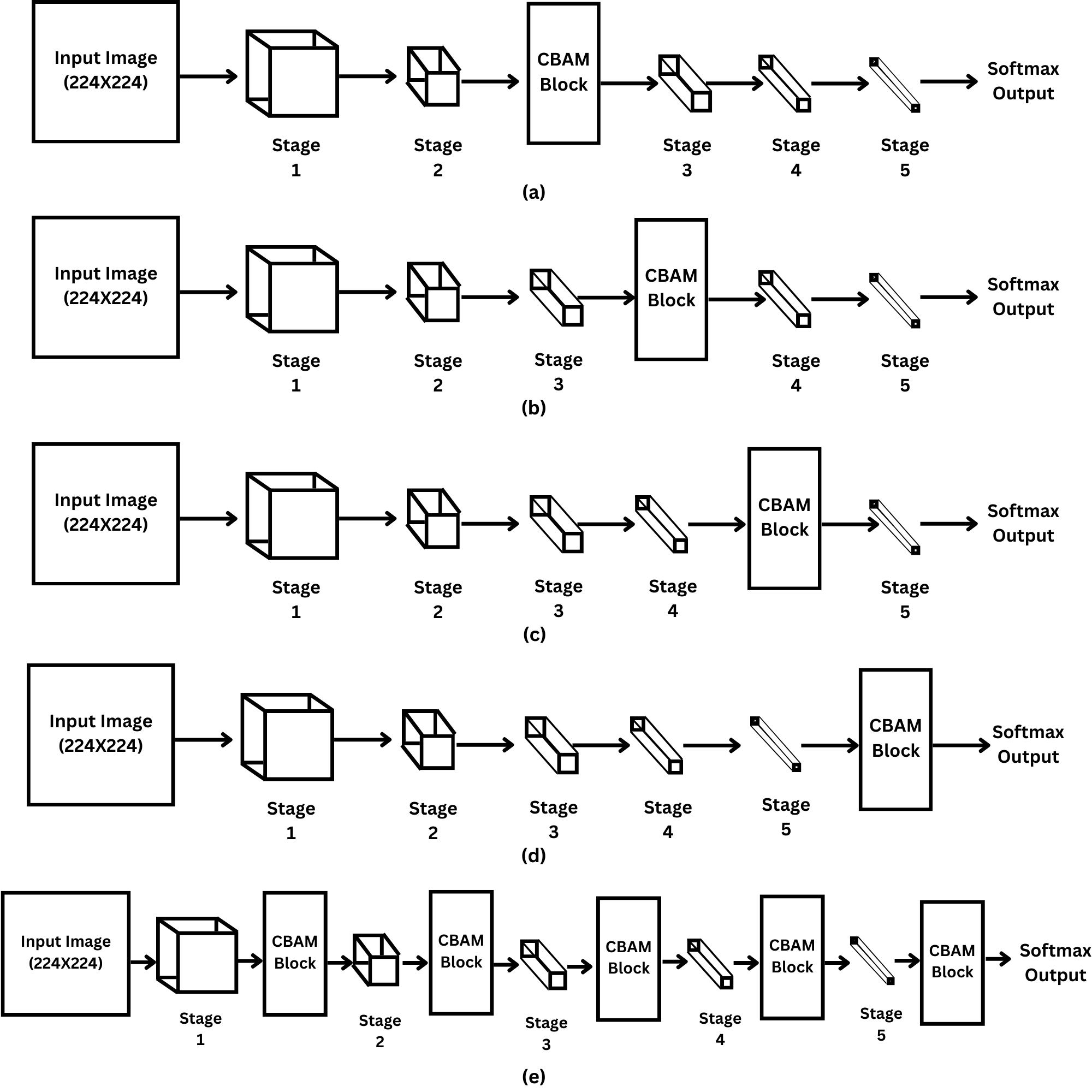

Investigation of CBAM Module Placement in the ResNet-18 Architecture

Convolutional Neural Networks (CNNs) have revolutionized computer vision by automatically learning hierarchical representations from raw input data. Despite their success, CNNs struggle to capture contextual information effectively across the channel and spatial dimensions of their feature maps. Attention mechanisms, particularly the Convolutional Block Attention Module (CBAM), have emerged as a way to improve CNN performance by enabling the network to focus on relevant features and regions while suppressing irrelevant ones. In this paper, we investigate the optimal placement of the CBAM module within ResNet-18, a widely used CNN architecture. We conduct experiments on three diverse datasets (the MIT Indoor Scenes dataset, CIFAR-10, and MNIST) to explore how CBAM placement affects model performance. We address key research questions on the influence of CBAM placement on accuracy, convergence speed, and the model's ability to capture contextual information. Through rigorous experimentation and analysis, we provide insights into integrating attention mechanisms within CNN architectures, contributing to advances in computer vision.
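To make the mechanism concrete, the following is a minimal NumPy sketch of a CBAM forward pass, assuming the formulation of the original CBAM design: channel attention from a shared MLP applied to average- and max-pooled channel descriptors, followed by spatial attention from a 7×7 convolution over channel-wise average and max maps. It is not the implementation used in our experiments. The weights are random and untrained, biases and the batch dimension are omitted, and the names `cbam`, `init_cbam`, and `RESNET18_STAGE_CHANNELS` are hypothetical. The channel counts are those of the four residual stages of a standard ResNet-18; each stage output is one possible insertion point, whereas the original CBAM design inserts the module inside every residual block, before the identity addition.

```python
import numpy as np

# Output channel counts of the four residual stages of a standard ResNet-18.
RESNET18_STAGE_CHANNELS = {"layer1": 64, "layer2": 128, "layer3": 256, "layer4": 512}


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Channel weights of shape (C, 1, 1) for a feature map x of shape (C, H, W)."""
    avg = x.mean(axis=(1, 2))  # global average pooling -> (C,)
    mx = x.max(axis=(1, 2))    # global max pooling -> (C,)

    def mlp(v):
        # Shared two-layer MLP with a ReLU bottleneck of size C // reduction.
        return w2 @ np.maximum(w1 @ v, 0.0)

    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def spatial_attention(x, kernel):
    """Spatial weights of shape (1, H, W) from a k x k conv over [avg; max] maps."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    # Zero padding keeps the output at H x W.
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    h, w = x.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]


def init_cbam(channels, reduction=16, kernel_size=7, seed=0):
    """Hypothetical random (untrained) CBAM parameters for a given channel count."""
    rng = np.random.default_rng(seed)
    hidden = max(channels // reduction, 1)
    w1 = rng.standard_normal((hidden, channels)) * 0.1
    w2 = rng.standard_normal((channels, hidden)) * 0.1
    kernel = rng.standard_normal((2, kernel_size, kernel_size)) * 0.1
    return w1, w2, kernel


def cbam(x, w1, w2, kernel):
    """Apply channel attention, then spatial attention, to x of shape (C, H, W)."""
    x = x * channel_attention(x, w1, w2)
    return x * spatial_attention(x, kernel)


# Refine a synthetic layer2 output (28 x 28 for a 224 x 224 input image).
c = RESNET18_STAGE_CHANNELS["layer2"]
feat = np.random.default_rng(1).standard_normal((c, 28, 28))
refined = cbam(feat, *init_cbam(c))
print(refined.shape)  # (128, 28, 28)
```

Because CBAM preserves the shape of its input, the same module can be dropped in after any stage or inside any residual block without altering the surrounding layers. This is what makes its placement a free design choice worth studying.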